What Is Split Testing And A/B Testing For Personalized Ads?

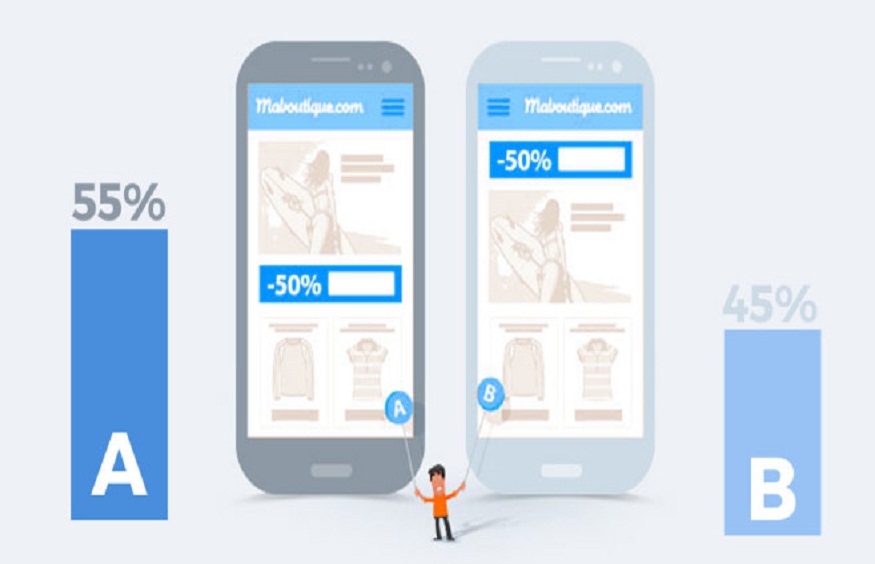

A/B testing compares two or more versions of an advertisement, webpage, or other piece of digital media to determine which version performs better. The method is vital in digital marketing because it shows how individual elements influence user behaviour. By understanding which ad triggers the desired action, marketers can improve ads aimed at particular customer segments.

A Comprehensive Guide to Split Testing

Split testing is a technique for finding out which version of a piece of content or advertisement resonates most strongly with the target audience. One piece, such as an advertisement, is created in several forms, which are then shown to different audience segments. The version that generates the most clicks, conversions, or engagement is identified as the most appealing.

The primary objective of split testing is to use data-driven judgments to improve and fine-tune the efficacy of marketing campaigns. Rather than speculating about which aspects of an advertisement will succeed, split testing yields specific information on what truly appeals to the audience.

The Definition of A/B Testing

In split testing, version A competes against version B to establish which of the two gets the better results. Because two versions are compared, the technique is frequently referred to as A/B testing. The first version, marked ‘A’, is the original (often called the control), while the second, marked ‘B’, is the revised version (often called the variant). In digital marketing, the technique is used for email campaigns, landing pages, and ads, among other things.

The gist of A/B testing is replacing one component (a headline, picture, or button, for instance) with a slightly different version and observing how users’ behaviour changes in response. Both versions are shown concurrently so that differences in timing or audience do not bias the comparison.
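One common way to show both versions concurrently is to assign each user to a variant at random but consistently. The following is a minimal sketch, not a prescribed method: the function name `assign_variant`, the experiment label, and the use of a SHA-256 hash are all assumptions made for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to one variant of an experiment (hypothetical helper).

    Hashing the experiment name together with the user ID gives an
    assignment that looks random across users but is stable for any
    single user, so the same person always sees the same version.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Illustrative usage with made-up user IDs: count how many users land in each group.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "headline-test")] += 1
print(counts)
```

Because the assignment depends only on the user ID and the experiment name, both groups are exposed during the same period and no lookup table of past assignments is needed.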

The Methods of A/B and Split Testing

Deciding which element to evaluate is the first step in the split and A/B testing process. Ad headlines, landing page designs, and call-to-action button colours are a few examples. Once the element has been identified, the variations are created. For an A/B test of an advertisement, for instance, two distinct headlines might be written.

These variations are then shown to different segments of the target audience. Key performance indicators such as click-through rate, conversion rate, and bounce rate are used to monitor each variation’s effectiveness. These metrics make it possible to determine which version works better.
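The metrics named above can be computed from raw counts. The sketch below is illustrative only: the `VariantCounts` fields and the sample numbers are hypothetical, and it assumes conversion rate is measured per click and bounce rate as single-page sessions divided by all sessions. Analytics tools may define these metrics differently.

```python
from dataclasses import dataclass


@dataclass
class VariantCounts:
    """Raw counts collected for one variant (hypothetical field names)."""
    impressions: int           # times the ad was shown
    clicks: int                # clicks on the ad
    conversions: int           # desired actions completed after a click
    sessions: int              # landing-page sessions
    single_page_sessions: int  # sessions that left after one page


def safe_rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when there is no data yet."""
    return numerator / denominator if denominator else 0.0


def summarize(counts: VariantCounts) -> dict:
    """Compute the three KPIs for one variant under the assumptions above."""
    return {
        "click_through_rate": safe_rate(counts.clicks, counts.impressions),
        "conversion_rate": safe_rate(counts.conversions, counts.clicks),
        "bounce_rate": safe_rate(counts.single_page_sessions, counts.sessions),
    }


# Made-up counts for two headline variants.
variant_a = VariantCounts(impressions=1000, clicks=50, conversions=5,
                          sessions=50, single_page_sessions=20)
variant_b = VariantCounts(impressions=1000, clicks=65, conversions=9,
                          sessions=65, single_page_sessions=22)
for name, counts in (("A", variant_a), ("B", variant_b)):
    print(name, summarize(counts))
```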

The Significance of Data in A/B and Split Testing

The effectiveness of split testing and A/B testing depends heavily on data. Marketers are better equipped to decide which versions are most effective when they have access to accurate and trustworthy data. The KPIs in this data include click-through rates, conversion rates, and user engagement. Marketers can understand the target audience’s preferences by examining trends and patterns in this data.

Data is usually collected with analytics tools that monitor user activity on a website or responses to an ad. This data is then evaluated to find out which version of the tested element performed better. To ensure that the results are statistically significant, it is crucial to run the tests long enough to collect sufficient data.
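One widely used way to check statistical significance when comparing two conversion rates is a two-proportion z-test. The sketch below uses only the Python standard library. The function name and the example counts are illustrative assumptions, and other tests (for example a chi-squared test) could be used instead.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates.

    conv_a / n_a and conv_b / n_b are the observed conversion rates of
    versions A and B. The pooled rate is used for the standard error,
    which is the usual choice under the null hypothesis of no difference.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up example: 100 of 1,000 users converted on A, 150 of 1,000 on B.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05, a conventional threshold rather than a rule) suggests the observed difference is unlikely to be due to chance alone.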

Challenges of Split Testing and A/B Testing

Despite their advantages, A/B and split testing have their own set of drawbacks. One of the major difficulties is the need for a large number of site visitors. The tested variations must be seen by a large user base before the findings become statistically significant. Without significant traffic, it can be hard to tell which version is more effective.

Bias in the test results can be another challenge. The outcomes might not be reliable if the audience segments used in the test are not representative of the target audience as a whole. This can lead to inaccurate conclusions and, in turn, to marketing decisions that do not work out.

It can also be hard to determine which change produced the observed result when too many elements are tested simultaneously. Testing each component separately keeps the outcomes interpretable.
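To get a rough sense of how much traffic a test needs, the standard sample-size approximation for comparing two proportions can be used. This is a sketch under stated assumptions: a two-sided test, equal group sizes, and default values of 5% significance and 80% power, which are common conventions rather than requirements. The function and parameter names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_detectable_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate number of users needed in EACH variant.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    min_detectable_lift: smallest absolute increase worth detecting,
        e.g. 0.01 to detect a change from 5% to 6%.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Illustrative inputs: a smaller lift requires noticeably more users.
print(sample_size_per_variant(0.05, 0.01))
print(sample_size_per_variant(0.05, 0.005))
```

The required sample grows quickly as the lift to be detected shrinks, which is why low-traffic sites often struggle to reach a conclusive result.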

Best Practices for Split Testing and A/B Testing

Following recommended guidelines is crucial to maximising the benefits of A/B and split testing. Testing one component at a time is a key best practice, because it isolates how that component affects the performance of the advertisement or webpage. Another is to make sure the test runs for an appropriate length of time, since tests that are concluded too soon can give inconclusive or misleading results. To make sure that the results are statistically significant, it is also critical to use a sufficiently large sample size.

Specific objectives should also be set before a test begins. Whether the goal is to boost conversion rates or click-through rates, a clearly defined purpose helps with both designing the test and interpreting its results.

Implementing A/B and Split Testing for Customised Ads

Split testing and A/B testing can be quite useful for customised advertising and marketing. By experimenting with various customised offers, graphics, and messaging, marketers can determine which kind of personalization most appeals to their target demographic. A clothes store, for example, might test different headlines or visuals in a customised advertisement depending on the user’s past purchasing patterns.
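For personalised ads, results are often broken down by audience segment, since a variant that wins overall may not win for every group. The sketch below is illustrative only: the segment names, the event format of `(segment, variant, converted)` tuples, and both function names are assumptions.

```python
from collections import defaultdict


def conversion_rates(events):
    """Return {segment: {variant: conversion rate}}.

    events: iterable of (segment, variant, converted) tuples, where
    converted is True if the user completed the desired action.
    """
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [conversions, shown]
    for segment, variant, converted in events:
        totals[(segment, variant)][0] += int(converted)
        totals[(segment, variant)][1] += 1
    rates = defaultdict(dict)
    for (segment, variant), (conversions, shown) in totals.items():
        rates[segment][variant] = conversions / shown
    return dict(rates)


def leading_variant(rates):
    """Return the variant with the highest observed rate in each segment."""
    return {segment: max(by_variant, key=by_variant.get)
            for segment, by_variant in rates.items()}


# Made-up events for a hypothetical clothes store.
events = [
    ("frequent_buyer", "A", True), ("frequent_buyer", "A", False),
    ("frequent_buyer", "B", True), ("frequent_buyer", "B", True),
    ("new_visitor", "A", True), ("new_visitor", "B", False),
]
rates = conversion_rates(events)
print(rates)
print(leading_variant(rates))
```

The highest observed rate in a segment is only a starting point. Each segment still needs enough data for the difference to be statistically significant, and splitting traffic by segment reduces the sample available to each comparison.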

Marketing professionals can increase the likelihood of grabbing the target audience’s attention by testing to determine the best ways to employ personalization. A higher return on investment for advertising expenditure may result from this, as well as higher engagement and conversion rates.

Conclusion

Split testing and A/B testing are crucial techniques in digital marketing, particularly for optimising personalized ads. By comparing several iterations of an advertisement or webpage, marketers can make data-driven decisions that result in increased engagement and conversion rates. These testing procedures offer insights into what appeals to the target audience and so make continuous improvement of marketing strategies possible.

Personalised advertising works best when it is based on actual user data and optimised for effectiveness. By employing split testing and A/B testing, marketers can check that their tailored advertisements are both highly relevant and effective in accomplishing their objectives. Though these testing techniques have drawbacks, adhering to best practices can help overcome them and maximise the advantages of split and A/B testing in digital marketing.